In every country, election season is a critical, complex, and often polarizing event. 2024 marks a landmark election year as over 60 countries across the globe go to the polls - meaning that about 49% of the people on Earth could be casting a ballot this year. With such high stakes, election security is a top priority for every country hoping to host an informed, fair, and peaceful electoral process. However, the rapid growth of technology has created a new era of cyber fraud that threatens to upend election security from various angles.

As elections in the US and many other countries draw to a close, global attention has turned to election security. In this blog article, we look at the ways that AI, deep fake technology, and social engineering attacks have been used - and might be used again - to interfere in elections, disrupt voting, or spread misinformation. First, let’s try to understand how emerging cyber fraud is a threat to election security.

Rising Cyber Fraud Affecting Election Security

Cyber fraud against election security typically involves phishing, data breaches, and social engineering attacks. While these are usually aimed at mining private data to sell online, they can also be politically motivated and used to extort voters or target specific groups. Another emerging cyber threat to election security in the last few years has been the widespread use of generative Artificial Intelligence (AI) and deep fake technology. These methods have been used worryingly often across the globe to manipulate voters, spread propaganda, and disrupt electoral processes.

As voters battle a war of ideologies, policies, and morals, cyber fraud has also become a jarringly effective tool to exploit vulnerabilities in election infrastructure - targeting electronic voting systems and voter databases. According to CISA, generative AI capabilities will likely not introduce new risks in the 2024 election cycle but may amplify existing risks to election infrastructure. These cyber-attacks could be carried out by foreign entities looking to destabilize elections, hacktivists, state enemies, or regular criminals looking to disrupt the peace.

Unfortunately, cyber fraud against election security doesn’t even need to be successful for it to be disruptive. Security and transparency are inherent elements of democratic elections - meaning that once the integrity of an election is even slightly compromised by cyber fraud, the entire electoral process comes under suspicion. This can lead to massive destabilization, riots, and erosion of social cohesion. Now that we know how and why cyber fraud can complicate and obstruct election security, let’s look further into the use of AI to commit cyber fraud during elections.

AI allows users to significantly increase the scale, scope, and speed of their activities – even if a user is focused on antisocial and harmful goals. Social engineering tactics, including phishing and misinformation campaigns, already pose significant risks to the integrity of electoral processes. The interconnected nature of technology in modern elections amplifies the potential impact of these threats, and the speed at which AI tools process information magnifies that impact further.

AI Cyber Fraud During Elections

Generative AI leverages deep learning techniques and neural networks to create content that is almost indistinguishable from human-made content. This means that it can be used to create text, images, videos, and more from a single prompt. While AI has drawn its fair share of backlash from the general public for its use in art and the media at the expense of human artists, it is also criticized for its ability to enable hacking and the spread of malware.

AI can be used to construct and spread misinformation - especially during election season. With such a wide range of voters to influence and the ease with which social media spreads every type of provocative content, AI has become a key tool for creating misinformation campaigns that cast doubt on election processes and sway voter opinions. While major social media platforms do try to stop the spread of AI-generated political misinformation, many still regard their hands as tied in the matter. This allows AI tools to generate and spread massive amounts of disinformation within seconds - making it harder to detect and combat.

However, social media platforms do carry a large share of the blame: by actively suppressing political content, they can skew what users see. Social media giant Meta said it would no longer “proactively recommend content about politics” - this includes topics “potentially related to things like laws, elections, or social topics.” While suppression doesn’t necessarily equal misinformation, many people use social media to find out about news and current events - and that picture would be skewed by the suppression of political content.

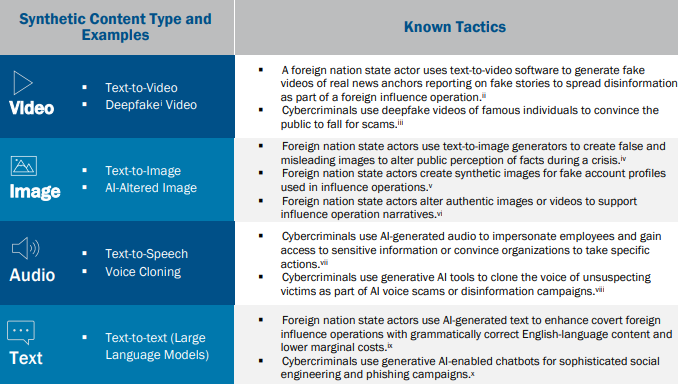

Examples of how malicious actors have used generative AI capabilities. Sourced from CISA.

Last year in the US, accounts affiliated with the campaigns of both Donald Trump and Ron DeSantis shared videos with AI-generated content to undermine each other’s candidacy. DeSantis released an AI-generated image in his video depicting Donald Trump hugging Dr. Anthony Fauci - who led the federal government’s COVID-19 response and remains deeply unpopular among critics of pandemic mitigation measures.

In a 2023 report released by Freedom House, a human rights advocacy group, researchers documented the use of generative AI in 16 countries “to sow doubt, smear opponents, or influence public debate.” The group goes on to state that AI can serve as an amplifier of digital repression - making censorship, surveillance, and the creation and spread of disinformation easier, faster, cheaper, and more effective. Now, let’s turn our attention to deep fake technology and its role in threatening election security.

Deep Fake Cyber Fraud During Elections

Deep fakes are often the most dangerous form of cyber fraud for politicians. With AI rapidly progressing, gone are the days when an alarming number of fingers and uncanny valley faces could help viewers distinguish between real and deep fake. As a result, several deep fake videos are now not only widespread but disturbingly convincing.

CISA lists the creation of deepfakes and synthetic media as one of the main Tactics of Disinformation during election periods. Deep fake technology involves the use of synthetic media content - such as photos, videos, and audio clips - that have been digitally manipulated or entirely fabricated to mislead the viewer. These deep fakes can then be used as part of disinformation campaigns to sway voters, spread propaganda, and cause disruptions.

Reuters reported an estimate that about 500,000 video and voice deep fakes would be shared on social media sites globally in 2023, and that while voice cloning features used to cost up to US$ 10,000 until late last year, startups now offer them for only a few dollars. In the US, deep fakes have caused uproars for political campaigns several times over. Earlier this year, a deepfake of President Biden’s voice was used in as many as 25,000 calls telling recipients not to bother voting, mere days before the New Hampshire primary.

In 2023, a deep fake video surfaced depicting Biden making transphobic comments and spread rapidly across social media. Also in 2023, the Republican National Committee published a 30-second AI-generated ad that used fake images to suggest a cataclysmic future if Biden were reelected - with San Francisco being shut down by crime. A separate study that looked at the social media accounts of all French parties during the election campaigns found that far-right parties were particularly prone to using deep fakes.

During the 2024 elections in India, the Washington Post ran a story about Divyendra Singh Jadoun, a man famous for using artificial intelligence to create Bollywood sequences and TV commercials. Jadoun states that hundreds of politicians have been clamoring for his services - with more than half asking for “unethical” AI-generated content to smear the opposition. Some campaigns have even requested low-quality fake videos of their own candidates, which could be released to cast doubt on any damning real videos that emerge during the election.

In Slovakia in 2023, a seemingly damning audio clip began circulating widely on social media just two days before the country’s election. In this deep fake audio clip, the country’s Progressive party leader, Michal Šimečka, appeared to be discussing how to rig the election, partly by buying votes from the country’s marginalized Roma minority.

On the 4th of November, CBS reported that the FBI had warned of deepfake videos ahead of the US elections as well. The Federal Bureau of Investigation warned the public about two videos on election security that falsely claimed to be from the FBI. One mentions the apprehension of groups committing ballot fraud and the other concerns Second Gentleman Doug Emhoff. The bureau stresses that the videos are not authentic, are not from the FBI, and that the content they depict is false.

With growing political tensions across the globe, many people take to social media to vent their frustrations and stay updated. Unfortunately, deep fake technology has created a festering cycle of cyber fraud that threatens the fundamental security of election processes. Now, let’s see how social engineering attacks are used to impact election security as well.

Social Engineering Cyber Fraud During Elections

Social engineering attacks are used to exploit and manipulate people into divulging private information. This is often done by impersonating someone in authority to gain a victim’s trust and then using their credentials to gain access to privileged information or systems - a risk that controls such as a robust network firewall can help contain, although they cannot stop a person from being deceived in the first place. Election processes and voter registration systems collect and store large amounts of personal data to verify credentials and voting eligibility. Unfortunately, threat actors can exploit this process to steal private information for monetary gain or extortion.

Most social engineering attacks on election security involve spear phishing attacks in which hackers will pretend to be candidates, journalists, election supervisors, or campaign members and seek access to sensitive information or funding. A reliance on technology for most election processes makes the general public more susceptible to these attacks and more willing to simply give away their information online.

Phishing campaigns can take the form of emails, phone calls, texts, and more - often targeting the elderly and often extended over a long period to build trust with the victim. AI tools are often used to create automated phishing scripts or even generate malware code, which is something a credible Network Threat Detection and Response (NDR) platform can help detect and block. Voice cloning technology can also be used to generate fake voter calls and overwhelm call centers.

The Wall Street Journal reported that researchers are seeing an uptick in Distributed Denial-of-Service (DDoS) attacks against websites that provide voter information and registration portals - where hackers will flood websites with traffic to knock them offline. Social engineering attacks can also lead to the disruption of election management systems, manipulation of voter registration databases, and unauthorized access to voting machine software or configurations.

A key risk of social engineering cyber fraud is the leaking of sensitive data such as ID numbers, social security numbers, phone numbers, addresses, and more. Campaigns and politicians are also vulnerable to social engineering attacks where hackers might try to leak sensitive campaign strategies or communications or spread disinformation from trusted sources.

Just last month, Georgia officials said that they thwarted an attempt to flood the state’s absentee voter portal - which could have caused the site to crash. The secretary of state’s office could see that at least 420,000 IP addresses were trying to access the site at the same time - fortunately, no data was breached and the system returned to normal within 30 minutes.
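To make the idea of a traffic flood more concrete, here is a minimal Python sketch of one common defensive building block: a per-client sliding-window rate limiter. It is an illustration only, not a description of how Georgia's portal or any real election system is protected. The class name `SlidingWindowLimiter` and its parameters are hypothetical, chosen for this example.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`.

    Hypothetical helper for illustration; not taken from any real portal.
    """

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client IP -> timestamps of accepted requests

    def allow(self, client_ip, now):
        """Return True if a request from `client_ip` at time `now` is accepted."""
        hits = self._hits[client_ip]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject without recording the request
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
decisions = [limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0)]
print(decisions)  # the fourth request inside the window is rejected
```

A per-IP limit like this only slows down individual noisy clients. A flood spread across hundreds of thousands of addresses generally also needs upstream protections, such as traffic filtering at the network edge, which are outside the scope of this sketch.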

In another instance of social engineering cyber fraud, the U.S. Election Assistance Commission, a federal agency that sets guidelines for running elections, notified voters in October that it had received reports of emails impersonating the agency. These phishing messages tried to harvest personal data by prompting people to review and confirm their voter registration application to complete the process.

Another example of this attack method can be seen during the May 2014 Ukrainian presidential election, where purported pro-Russian hacktivists CyberBerkut claimed credit for a series of malicious activities against the Ukrainian Central Election Commission (CEC) - including a system compromise, destruction of vital data and systems including vote tabulation software, a data leak, a DDoS attack, and an attempted defacement of the CEC website with fake election results.

Before the start of 2024, an individual known as Jimbo disclosed details regarding a suspected breach of the General Elections Commission of Indonesia's (KPU) information system. The breach reportedly involved a substantial database containing records of numerous citizens, totaling 252,327,304 entries. Jimbo has put this database up for sale at a price of US $74,000 with the data set in question said to include passport details and other private information.

All these social engineering attacks might scare the public and make them question the integrity of their political candidates, election process, and government. However, a few threat actors should not make us afraid to exercise our democratic right to vote freely and fairly.

Cybersecurity Tips for the Public to Ensure Election Security

Here are some tips for the general public to stay safe from cyber fraud during election periods:

- Validate and verify all the political content you see and share on social media.

- Be wary of videos and images posted by unverified sources or vindictive social media accounts.

- Research author and outlet credentials before believing facts or stories.

- Do not provide any campaign members or politicians with personal information or funds without proper verification.

- Be wary of links and attachments in messages that claim to come from election officials or candidates.

- Make use of effective endpoint security to secure your network from malware and external threats.

- Engage with trustworthy news sources and social media accounts.
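As one illustration of the advice above about links, the following Python sketch checks whether a URL uses HTTPS and whether its actual hostname ends in `.gov`. It assumes a US context, where `.gov` is reserved for government organizations. The function name `is_gov_link` and the example URLs are hypothetical and used only for demonstration. A passing check is a hint, not proof that a message is genuine.

```python
from urllib.parse import urlsplit


def is_gov_link(url):
    """Return True if `url` is an HTTPS link whose hostname ends in .gov.

    Hypothetical helper for illustration. It inspects only the hostname,
    so text such as ".gov" in the path, query, or user-info part is ignored.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower().rstrip(".")
    return parts.scheme == "https" and host.endswith(".gov")


# Example URLs are illustrative only.
for link in (
    "https://www.eac.gov/voters",
    "https://eac.gov.example.com/confirm",  # look-alike: real host is example.com
    "https://www.eac.gov@evil.example/",    # look-alike: real host is evil.example
):
    print(link, is_gov_link(link))
```

The two look-alike links show why reading a URL by eye is unreliable: the part that matters is the hostname the browser will actually connect to.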

However, while we can do as much as we can as the general public, it’s up to much larger organizations to take the necessary steps to prevent cyber fraud from threatening election security.

Proactive Cybersecurity Measures: How Organizations Can Practice Cyber Resilience During Elections

For many election organizations, cybersecurity is an afterthought when dealing with policymaking and campaigning. However, cybersecurity is integral to maintaining a safe and secure electoral process - thereby building trust among the people you wish to govern. While most organizations can choose to simply invest in managed security services and focus on politics, there are other ways organizations, election authorities, technology providers, and policymakers can be proactive in election security and preventing cyber fraud:

- Promoting a culture of transparency with voters and the electoral process.

- Vetting campaign officials and members to weed out any insider threats.

- Implementing robust and proactive cybersecurity platforms - such as Next-Generation Firewalls (NGFW) - to maintain the integrity of critical infrastructure.

- Conducting regular security audits to find weak areas or vulnerabilities.

- Establishing an incident response plan to mitigate threats and damage efficiently.

- Introducing a system of Zero-Trust to maintain access control and reduce the risk of unauthorized access.

- Using human authentication tools such as CAPTCHAs and biometric verification.

- Communicating clearly with the public and your campaign about official procedures and channels so they do not fall for phishing scams.

- Ensuring that campaign officials, party members, and staff all have the right cybersecurity training and knowledge of AI-generated threats.

- Creating public awareness campaigns about the threat of social engineering attacks throughout the campaign.

- Using a Managed Detection and Response (MDR) service to outsource cybersecurity needs so you can focus on your campaign. Now, you might be thinking “What is MDR and how is that going to help my candidate win the election?” It’s not, but it will ensure that your cybersecurity posture is in the right place to provide the public with a fair and free election.

- Using risk-limiting audits, which check reported results against a random sample of paper ballots, to confirm the outcome and provide transparency along the way.

- Collaborating with government agencies, cybersecurity experts, and the public. In the US, CISA works to provide election stakeholders with the information they need to manage risk to their systems and assets.

- Enabling Multi-Factor Authentication on all relevant devices and accounts.

- Obtaining a “.gov” domain to allow the public to interact with a verified website and official organization.
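Of the measures listed above, risk-limiting audits are the easiest to illustrate with a short calculation. The Python sketch below shows a simplified two-candidate ballot-polling test in the style of the BRAVO method: each randomly drawn paper ballot for the reported winner raises a running statistic, each ballot for the reported loser lowers it, and the audit stops once the statistic reaches 1 divided by the risk limit. The function name, the `"winner"`/`"loser"` labels, and the example numbers are assumptions made for this illustration. Real audits follow procedures set by election authorities and handle multiple candidates, invalid ballots, and sampling details that are omitted here.

```python
def ballot_polling_audit(sample, reported_winner_share, risk_limit=0.05):
    """Simplified two-candidate ballot-polling audit (BRAVO-style sketch).

    sample: sequence of "winner" / "loser" labels read from randomly
            drawn paper ballots (hypothetical labels for this example).
    reported_winner_share: reported winner's share of the two-candidate vote.
    Returns (confirmed, ballots_examined).
    """
    if not 0.5 < reported_winner_share < 1.0:
        raise ValueError("reported_winner_share must be between 0.5 and 1")
    if not 0.0 < risk_limit < 1.0:
        raise ValueError("risk_limit must be between 0 and 1")

    threshold = 1.0 / risk_limit
    statistic = 1.0
    examined = 0
    for ballot in sample:
        examined += 1
        if ballot == "winner":
            statistic *= reported_winner_share / 0.5
        elif ballot == "loser":
            statistic *= (1.0 - reported_winner_share) / 0.5
        # Any other label (blank or invalid ballot) leaves the statistic unchanged.
        if statistic >= threshold:
            return True, examined  # enough evidence to confirm the reported outcome
    return False, examined  # not enough evidence yet


# Illustrative numbers only: a reported 60% share and a 5% risk limit.
print(ballot_polling_audit(["winner"] * 17, reported_winner_share=0.6))
```

If the sample runs out before the threshold is reached, the outcome is not rejected. Auditors would instead draw more ballots or escalate toward a full hand count.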

Implementing these proactive cybersecurity measures and anticipating potential vulnerabilities will help organizations maintain secure, fair, and reliable elections. As we move to vote for a better future, let’s ensure that we make use of the right tools and practices to keep that future safe as well. Cyber fraud is a growing threat to election security and should be given the same priority as policymaking in the future.